Robotic imitation learning (IL) in dynamic environments—where object positions or external forces change unpredictably—poses a major challenge for current state-of-the-art methods. These methods often rely on multi-step, open-loop action execution for temporal consistency, but this approach hinders reactivity and adaptation under dynamic conditions. We propose Noise Augmented Behavior Cloning Transformer (NoisyBCT), a robust and responsive IL method that predicts single-step actions based on a sequence of past image observations. To mitigate the susceptibility to covariate shift that arises from longer observation horizons, NoisyBCT injects adversarial noise into low-dimensional spatial image embeddings during training. This enhances robustness to out-of-distribution states while preserving semantic content. We evaluate NoisyBCT on three simulated manipulation tasks and one real-world task, each featuring dynamic disturbances. NoisyBCT consistently outperforms the vanilla BC Transformer and the state-of-the-art Diffusion Policy across all environments. Our results demonstrate that NoisyBCT enables both temporally consistent and reactive policy learning for dynamic robotic tasks.
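
The abstract does not say how the adversarial noise is computed. The sketch below shows one plausible instance of the idea, not necessarily NoisyBCT's scheme. It applies the Fast Gradient Sign Method (FGSM) to a low-dimensional embedding `z` instead of to raw pixels. It assumes a linear action head trained with mean-squared error, so the input gradient has a closed form. The names `linear_policy` and `fgsm_perturb`, and all numeric values, are hypothetical.

```python
def linear_policy(weights, bias, z):
    """Hypothetical linear action head: a = W z + b (stands in for the transformer)."""
    return [sum(w * zj for w, zj in zip(row, z)) + b_i for row, b_i in zip(weights, bias)]


def mse(pred, target):
    """Mean squared error between predicted and demonstrated actions."""
    return sum((p - t) ** 2 for p, t in zip(pred, target)) / len(pred)


def fgsm_perturb(weights, bias, z, target, epsilon):
    """FGSM applied to the embedding z rather than to image pixels (assumed scheme).

    For L = mean((W z + b - a*)^2) the input gradient is
    dL/dz_j = (2 / n) * sum_i (pred_i - a*_i) * W_ij, with n action dimensions.
    Each coordinate moves by exactly +/- epsilon (or stays put if its gradient is 0).
    """
    pred = linear_policy(weights, bias, z)
    n = len(pred)
    residual = [p - t for p, t in zip(pred, target)]
    grad = [(2.0 / n) * sum(residual[i] * weights[i][j] for i in range(n))
            for j in range(len(z))]
    sign = [(g > 0) - (g < 0) for g in grad]
    return [zj + epsilon * s for zj, s in zip(z, sign)]


# Illustrative values: z could be two (x, y) keypoints from a spatial-softmax layer.
W = [[0.5, -0.2, 0.1, 0.3], [-0.4, 0.6, 0.2, -0.1]]
b = [0.0, 0.0]
z = [0.1, -0.3, 0.5, 0.2]
a_star = [0.4, -0.2]  # demonstrated action for this observation

z_adv = fgsm_perturb(W, b, z, a_star, epsilon=0.05)
clean_loss = mse(linear_policy(W, b, z), a_star)
adv_loss = mse(linear_policy(W, b, z_adv), a_star)  # a training step would minimize this
print(z_adv, clean_loss, adv_loss)
```

This loss is convex in `z`, so the sign step can only increase it. That is what makes the perturbation adversarial rather than random. In a real transformer policy, the input gradient would come from automatic differentiation. The noise magnitude and how perturbed and clean samples are mixed are assumptions here, not details taken from NoisyBCT.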

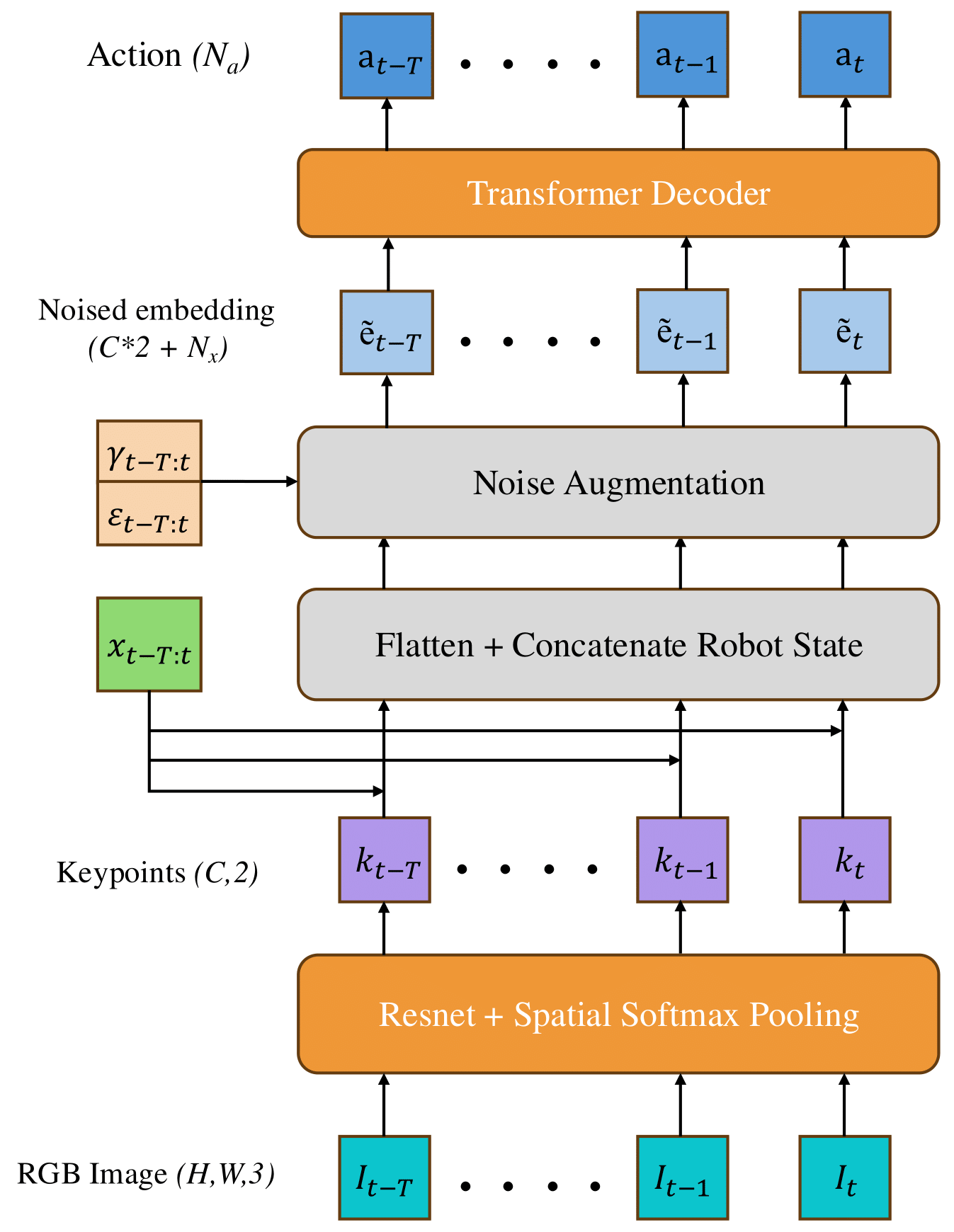

Our proposed approach, NoisyBCT, combines single-step action prediction with a longer observation context, enabling reactive action execution while preserving temporal action consistency. To mitigate the increased risk of covariate shift that comes with extending the context, NoisyBCT applies a carefully designed noise augmentation scheme during training and inference. Crucially, the noise is applied to the low-dimensional, spatial image representations produced by the ResNet + SpatialSoftmax vision backbone, which encourages robustness while preserving image semantics and intrinsic structure.
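
A spatial softmax (soft-argmax) layer turns each of the K feature maps from a convolutional backbone into the expected (x, y) location of that map's activation. An image is therefore summarized by only 2K numbers. The pure-Python sketch below assumes a fixed temperature and coordinates normalized to [-1, 1]. The function name and these choices are illustrative. NoisyBCT's actual settings, such as the keypoint count or a learnable temperature, may differ.

```python
import math


def spatial_softmax(feature_maps, temperature=1.0):
    """Map K feature maps (each H rows of W floats) to K expected (x, y) keypoints.

    Returns a flat list [x_1, y_1, ..., x_K, y_K] with coordinates in [-1, 1].
    """
    keypoints = []
    for channel in feature_maps:
        h, w = len(channel), len(channel[0])
        # Pixel-centre coordinates spread evenly over [-1, 1] along each axis.
        xs = [-1.0 + 2.0 * c / (w - 1) for c in range(w)] if w > 1 else [0.0]
        ys = [-1.0 + 2.0 * r / (h - 1) for r in range(h)] if h > 1 else [0.0]
        m = max(max(row) for row in channel)  # subtract the max for numerical stability
        weights = [[math.exp((v - m) / temperature) for v in row] for row in channel]
        total = sum(sum(row) for row in weights)
        # Softmax-weighted average position = expected keypoint location.
        ex = sum(weights[r][c] * xs[c] for r in range(h) for c in range(w)) / total
        ey = sum(weights[r][c] * ys[r] for r in range(h) for c in range(w)) / total
        keypoints.extend([ex, ey])
    return keypoints


# Usage: one channel with a sharp peak at the top-right corner, one flat channel.
peak = [[0.0] * 5 for _ in range(5)]
peak[0][4] = 50.0
uniform = [[0.0] * 5 for _ in range(5)]
keypoints = spatial_softmax([peak, uniform])
print(keypoints)  # approximately [1.0, -1.0, 0.0, 0.0]
```

Perturbing these coordinates nudges where features appear to be, rather than corrupting pixel values. That is one way to read the claim that the noise preserves image semantics and structure.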

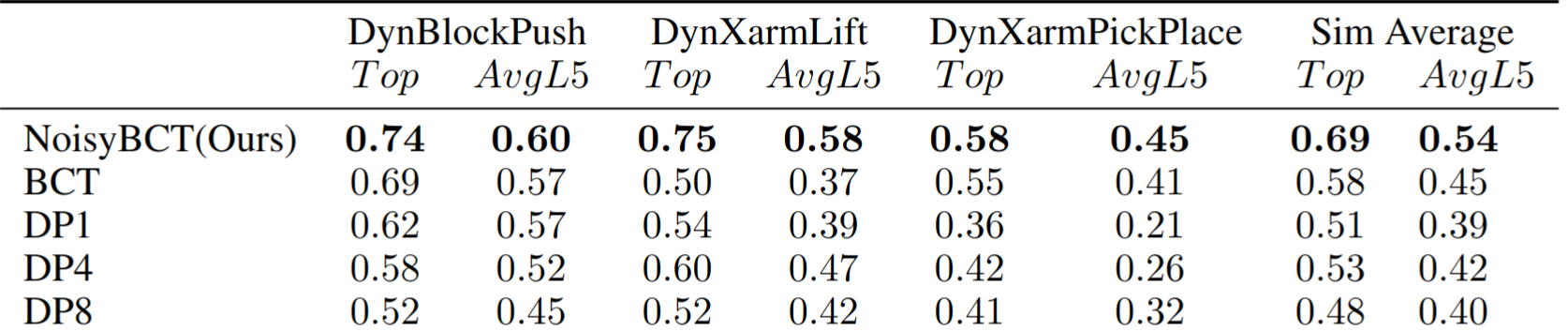

We evaluate NoisyBCT on a set of challenging, dynamic robot tasks, comparing it with the vanilla BC Transformer (without noise augmentation) and with Diffusion Policy/Consistency Policy at varying action horizons.
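
The toy below makes the action-horizon trade-off concrete. It is entirely hypothetical and is not the paper's benchmark. It compares two agents on a 1-D target that jumps mid-episode. One agent re-plans every step. The other executes 8-step action chunks open loop. A proportional controller stands in for a learned policy. The functions `plan_chunk` and `rollout`, the gain, and the jump time are all illustrative assumptions.

```python
def plan_chunk(error, action_horizon, gain):
    """Hypothetical stand-in for a learned policy: a proportional controller that
    plans action_horizon steps ahead, assuming the target will not move."""
    actions, remaining = [], error
    for _ in range(action_horizon):
        step = gain * remaining
        actions.append(step)
        remaining -= step
    return actions


def rollout(action_horizon, steps=40, jump_at=10, gain=0.5):
    """1-D tracking toy: the agent at x must follow a target that jumps at t = jump_at.

    A new chunk is planned only when the current one is used up, so with
    action_horizon > 1 the chunk runs open loop. Returns the summed |target - x|.
    """
    x, target, queue, total_error = 0.0, 0.0, [], 0.0
    for t in range(steps):
        if t == jump_at:
            target = 1.0  # unpredictable disturbance
        if not queue:
            queue = plan_chunk(target - x, action_horizon, gain)
        x += queue.pop(0)
        total_error += abs(target - x)
    return total_error


err_1 = rollout(action_horizon=1)  # single-step prediction, closed loop
err_8 = rollout(action_horizon=8)  # 8-step chunks executed open loop
print(f"horizon 1: {err_1:.3f}, horizon 8: {err_8:.3f}")  # horizon 1: 1.000, horizon 8: 7.000
```

The chunked agent keeps executing actions planned before the jump, so its error grows until the next re-plan. The toy isolates only this reactivity cost. It does not model the temporal-consistency benefit that motivates chunking, which NoisyBCT instead pursues through a longer observation context.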

NoisyBCT outperforms the baselines across all simulation tasks, exhibiting smooth and reactive control.

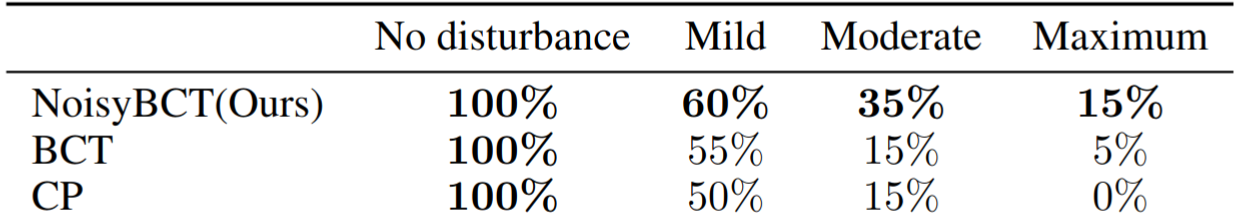

In the dynamic real-world experiment, the agents must place a hanging object into a narrow cup while compensating for unpredictable actuator drift. NoisyBCT shows improved resilience and significantly outperforms both vanilla BCT and Consistency Policy.

The code used in the paper can be found at github.com/noisy-bct/NoisyBCT.